AI’s Creative Vision

Developing Unique Narrative Videos with ChatGPT-4, Zeroscope, ElevenLabs, Midjourney, and D-ID

At DBA, AI has become a vital part of our everyday processes, transforming the way we work and innovate. Inspired by that transformative power and by the many AI mashup videos going viral, I ventured into the relatively new domain of AI-assisted narrative video creation. I took on the dual role of producer and editor while leaving as much of the creative work as possible to AI. Below, I outline how I worked with AI’s creative abilities to produce parody videos inspired by Narnia and Twilight.

The Projects: Two Unique Teasers

The idea for the first video came from wanting to see a Narnia-like story told as a true crime documentary. The second video was a playful reimagining of what the Twilight series would look like if made by Christopher Guest. As producer, my role was to guide the process while letting AI provide the actors, writing, direction, cinematography, and most casting decisions. It was an experiment to explore the potential of AI as a creative partner.

AI Creative Process: Writing

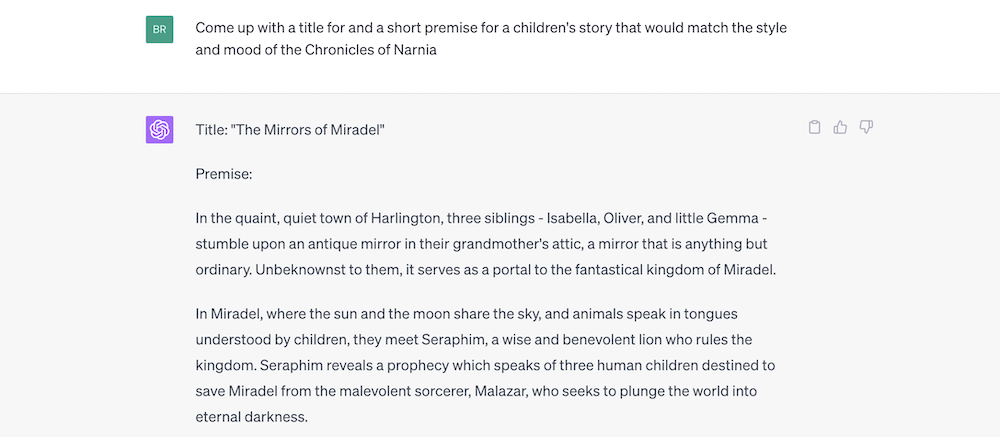

For both videos, I started by writing the script with ChatGPT-4, which brainstormed and drafted each storyline. All I did was get the ball rolling by asking what a Narnia-like story would look like as a true crime drama and what Twilight would look like if it were made by Christopher Guest. Once the idea was in motion, I watched how the AI spun it into a narrative.

For the Narnia-inspired video, I first used ChatGPT-4 to generate the title and premise of a children’s story akin to The Chronicles of Narnia. The result was “The Mirrors of Miradel,” a narrative involving three siblings, an antique mirror, and a magical kingdom. After finalizing the story, I worked with the AI to create a teaser for the tale in the style of a true crime documentary. My idea: if the Narnia stories had actually happened, what would the John Walsh version look like?

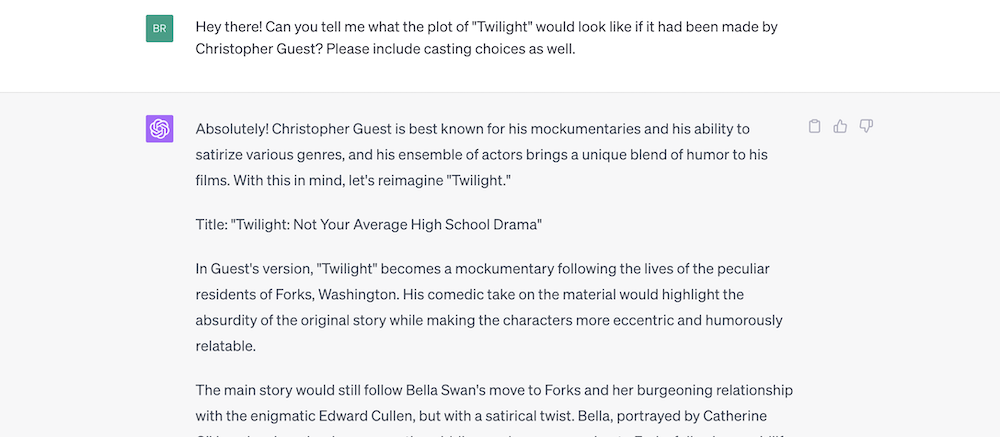

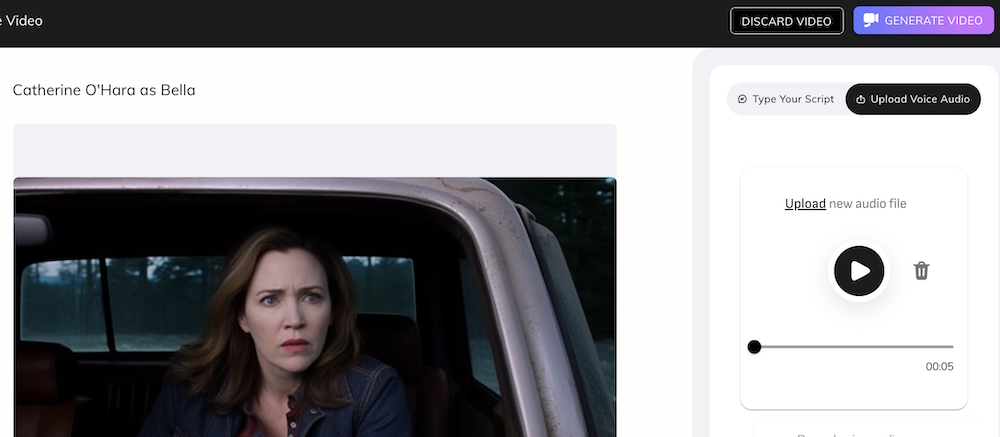

For the Twilight-inspired video, I first asked ChatGPT-4 about the cast and the overall storyline. The AI suggested Catherine O’Hara as Bella, Eugene Levy as Edward, and Christopher Guest as Jacob. I did, however, recast Jacob with Fred Willard because, honestly, that was too ridiculous to pass up. ChatGPT-4 wrote the storyline and a batch of one-liners for the cast in keeping with Christopher Guest’s comedic style. I then asked the AI to turn the storyline and some of the one-liners into a teaser trailer. This took some iteration, but my involvement was no more creatively controlling than a producer requesting rewrites from a screenwriter.
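The producer-style loop described above (pitch an idea, read the draft, request a rewrite) can be sketched as a growing message list in the format used by OpenAI’s Chat Completions API. This is a minimal, hypothetical sketch rather than the exact session behind these videos: the system message, the placeholder replies, and the rewrite request are invented for illustration, and nothing is sent unless the commented-out call at the end is enabled.

```python
# Hypothetical sketch: a producer/screenwriter prompt chain expressed as an
# OpenAI Chat Completions-style message list. Nothing is sent to any API here.
from typing import Dict, List

Message = Dict[str, str]


def start_conversation(idea: str) -> List[Message]:
    """Open a conversation with the producer's one-line idea."""
    return [
        # Assumed framing; not taken from the original session.
        {"role": "system", "content": "You are the screenwriter; the user is the producer."},
        {"role": "user", "content": idea},
    ]


def add_turn(history: List[Message], reply: str, request: str) -> List[Message]:
    """Record the model's reply, then the producer's next request (e.g. a rewrite)."""
    return history + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": request},
    ]


history = start_conversation(
    "What would Twilight look like if it were made by Christopher Guest? "
    "Suggest a cast and an overall storyline."
)
# Placeholder replies below stand in for real model output.
history = add_turn(
    history,
    reply="<storyline and one-liners from the model>",
    request="Turn this storyline and some of the one-liners into a teaser trailer script.",
)
history = add_turn(
    history,
    reply="<first draft of the teaser>",
    # Hypothetical rewrite note, the kind a producer might give a screenwriter.
    request="Please tighten the second half and end on a one-liner.",
)

# To actually send it (requires the `openai` package and an API key):
# from openai import OpenAI
# reply = OpenAI().chat.completions.create(model="gpt-4", messages=history)
# print(reply.choices[0].message.content)
```

Keeping the full history in each request is what lets a rewrite note refer back to the earlier draft without restating it.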

AI Creative Process: Audio

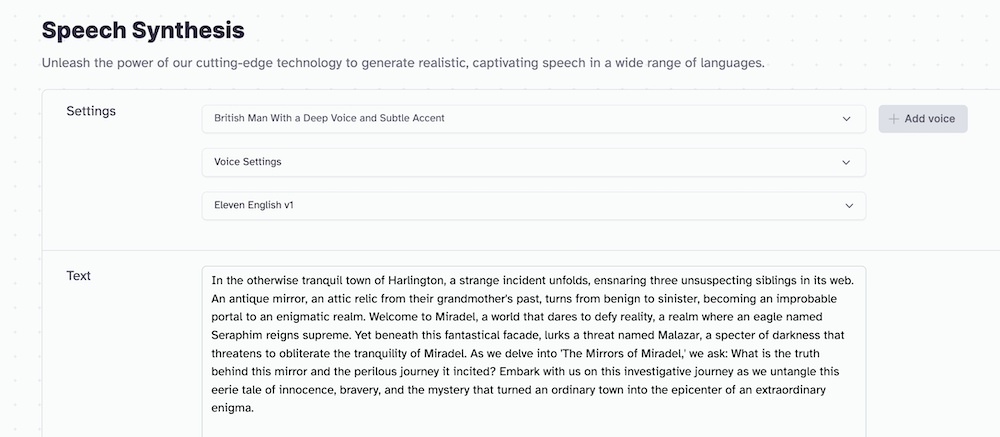

After scripting, I used ElevenLabs to create the voices for both videos: the narrator for the Narnia-inspired video and Bella, Edward, and Jacob for the Twilight video. For the Narnia narrator, I selected a voice I had generated previously. The Twilight characters took more iteration, which included lowering the stability setting and clicking Generate repeatedly until each voice was close enough.
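The stability setting mentioned above is also exposed through ElevenLabs’ text-to-speech REST API. The sketch below only builds the request with Python’s standard library. It assumes the `/v1/text-to-speech/{voice_id}` endpoint, the `xi-api-key` header, and the `stability` and `similarity_boost` voice settings as documented by ElevenLabs; the voice ID, the `ELEVENLABS_API_KEY` variable name, and the default values are placeholders. Check the current API reference before sending anything.

```python
import json
import os
import urllib.request

# Assumed endpoint root; verify against the current ElevenLabs API reference.
API_ROOT = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(voice_id: str, text: str, stability: float = 0.3,
                      similarity_boost: float = 0.75) -> urllib.request.Request:
    """Build (but do not send) a text-to-speech request.

    A lower `stability` (0.0 to 1.0) lets the delivery vary more between
    takes. The default values here are illustrative assumptions.
    """
    if not 0.0 <= stability <= 1.0:
        raise ValueError("stability must be between 0 and 1")
    body = {
        "text": text,
        "voice_settings": {"stability": stability, "similarity_boost": similarity_boost},
    }
    return urllib.request.Request(
        f"{API_ROOT}/{voice_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # Hypothetical environment variable name for the API key.
            "xi-api-key": os.environ.get("ELEVENLABS_API_KEY", ""),
            "Content-Type": "application/json",
            "Accept": "audio/mpeg",
        },
        method="POST",
    )


# Placeholder voice ID and line.
req = build_tts_request("YOUR_VOICE_ID", "<a line for Jacob>", stability=0.25)

# Sending it would return MP3 bytes:
# with urllib.request.urlopen(req) as resp, open("jacob_take1.mp3", "wb") as f:
#     f.write(resp.read())
```

Because a lower stability makes each take vary more, generating several takes at a low value and keeping the best one is the scripted equivalent of clicking Generate until the result is close enough.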

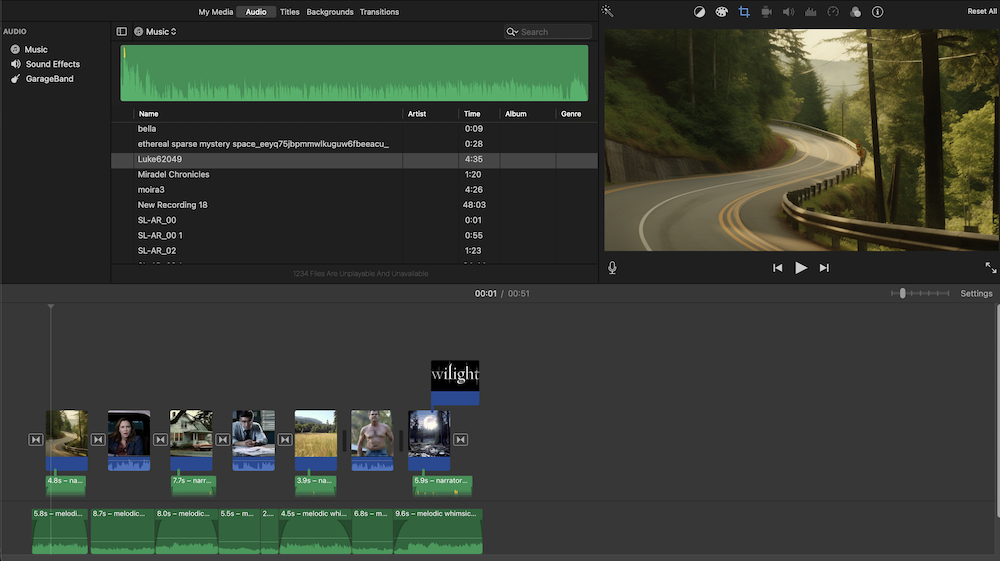

Zeroscope was my choice for creating the music. I asked ChatGPT-4 for music suggestions and used a Python script to have Zeroscope generate the corresponding tracks. For the Narnia-inspired video, the music was sparse and mysterious to fit the true crime documentary style; for the Twilight video, it was a quirkier piano riff.
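The music script itself is not reproduced here, so the following is only a hypothetical outline of its general shape: a dictionary of music directions (paraphrased from this post; in practice they came from ChatGPT-4), a loop that passes each one to a generator, and code that saves the results. The `generate` callable stands in for whatever model call the real script made; its signature, the 30-second default, and the `.wav` output format are all assumptions.

```python
from pathlib import Path
import re
from typing import Callable, Dict

# Music directions paraphrased from this post.
MUSIC_PROMPTS: Dict[str, str] = {
    "narnia": "sparse, mysterious ambient score for a true crime documentary",
    "twilight": "quirky, playful solo piano riff",
}


def slugify(text: str) -> str:
    """Turn a label into a safe file name stem."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def render_all(prompts: Dict[str, str],
               generate: Callable[[str, int], bytes],
               out_dir: Path,
               seconds: int = 30) -> Dict[str, Path]:
    """Call the (hypothetical) `generate` once per prompt and save each result.

    `generate(prompt, seconds)` is assumed to return encoded audio bytes.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    saved: Dict[str, Path] = {}
    for label, prompt in prompts.items():
        audio = generate(prompt, seconds)
        path = out_dir / f"{slugify(label)}.wav"
        path.write_bytes(audio)
        saved[label] = path
    return saved
```

Passing the generator in as a parameter keeps the prompt list and file handling independent of any particular music model, so the same loop works with a stub during testing.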

AI Creative Process: Visuals

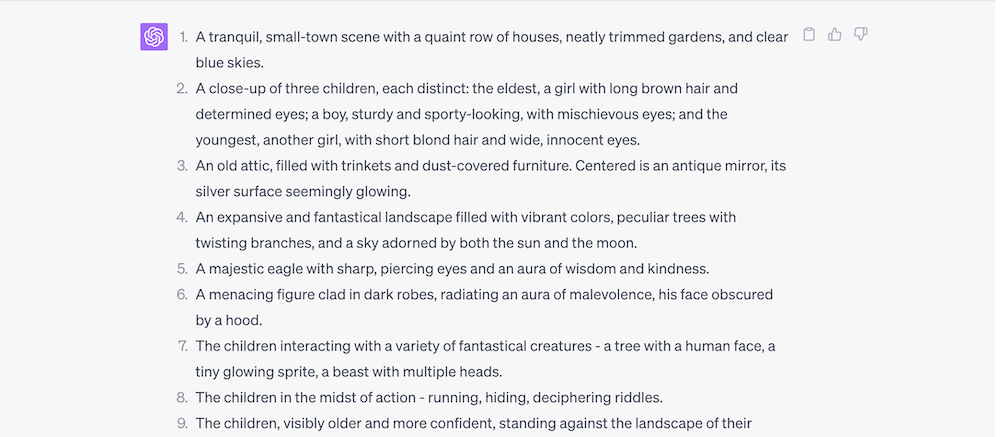

For the visuals of the Narnia-inspired video, I used Zeroscope to generate video clips. ChatGPT-4 provided scene descriptions, and Zeroscope created visuals from them. In most cases I had to refine the prompts by adding specifics so the visuals lined up better with the AI’s script, but the root prompt still came from ChatGPT-4.
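The refinement step can be sketched as a small helper that keeps ChatGPT-4’s root prompt intact and appends the producer’s extra specifics, skipping any detail the prompt already mentions. The function name, the comma-joined format, and the example scene and details are hypothetical; the resulting string is what would be handed to the text-to-video model for the next take.

```python
from typing import List


def refine_prompt(root: str, specifics: List[str]) -> str:
    """Append producer-added specifics to a root prompt from ChatGPT-4.

    The root prompt is kept verbatim and comes first (minus a trailing
    period); specifics already present in the prompt, compared
    case-insensitively, are skipped.
    """
    prompt = root.strip().rstrip(".")
    for detail in specifics:
        detail = detail.strip()
        if detail and detail.lower() not in prompt.lower():
            prompt += ", " + detail
    return prompt


# Hypothetical example: the scene text and details are illustrative only.
root = "Three siblings stand before an antique mirror in a dusty attic."
take_2 = refine_prompt(root, ["dim flashlight lighting", "documentary footage", "antique mirror"])
print(take_2)
```

Keeping the root prompt first and only appending to it preserves the AI’s original scene description while the added details steer the look.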

For the Twilight video, I used Midjourney to create images of the actors. ChatGPT-4 provided the image prompts, and after several iterations I had the images I wanted. I then used D-ID to animate the images so the characters appeared to speak the lines generated by ElevenLabs.
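For readers who want to script this step, a D-ID request pairs a still image with an audio file. The sketch below only builds the JSON body. The `source_url` and `script` fields (with `type` set to `"audio"` and an `audio_url`) are assumptions based on D-ID’s public Talks API and should be verified against its current reference; the function name and example URLs are hypothetical.

```python
import json


def build_talk_request(image_url: str, audio_url: str) -> dict:
    """Build a JSON body pairing a Midjourney portrait with an ElevenLabs line.

    Field names are assumed from D-ID's Talks API; verify before use.
    """
    return {
        "source_url": image_url,
        "script": {"type": "audio", "audio_url": audio_url},
    }


# Hypothetical URLs: both files must be publicly reachable by D-ID.
body = build_talk_request(
    "https://example.com/portraits/edward.png",
    "https://example.com/audio/edward_line_03.mp3",
)
print(json.dumps(body, indent=2))
# Sending it (assumed flow): POST this JSON to https://api.d-id.com/talks with
# your API key in an Authorization header, then poll the returned talk ID
# until a result video URL is available.
```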

My Contribution: Editing

The final stage combined the narrative, music, and visuals. I used iMovie for both videos: arranging the clips, making slight adjustments to the narration and music, and exporting the final product. No AI was involved in this step, but I tried to stay as true as possible to the AI’s creative vision, so to speak.

Conclusion: A Testament to AI’s Creative Potential

This experiment reinforced my belief in the creative potential of AI. Acting as a producer, I was able to guide the AI tools, doing my best to facilitate rather than influence the AI’s creative vision for the final product. The experience demonstrated that, while AI can provide the raw materials, the human touch is still needed to guide the process and make key decisions. The result was two unique videos that pushed the boundaries of what I thought was possible with AI.

By the way, much of this blog post, including its structure and key points, was co-developed through a dialogue with an AI, followed by heavy editing for specificity.

Resources